How Advances in Data Analytics are Shaping Research

In today’s fast-paced world, the way we conduct research is undergoing a radical transformation, thanks to the incredible advancements in data analytics. Imagine being able to sift through mountains of data in mere seconds, uncovering insights that would have taken months, if not years, to find using traditional methods. This isn't just a dream—it's the reality that researchers across various fields are experiencing as they harness the power of data analytics. From healthcare to social sciences, the impact is profound, reshaping methodologies, enhancing data interpretation, and revolutionizing decision-making processes.

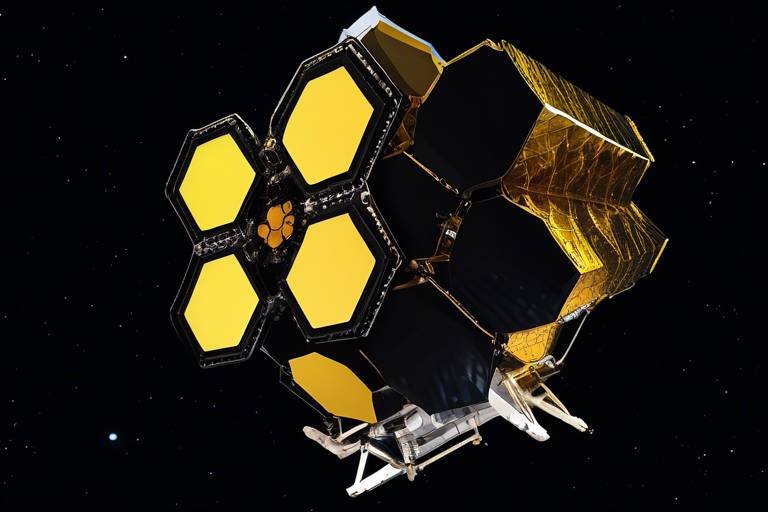

The integration of data analytics into research is akin to giving researchers a powerful telescope, allowing them to see beyond the immediate horizon. With the ability to analyze vast datasets, researchers can identify patterns and trends that were previously invisible. This capability not only enhances the accuracy of their findings but also accelerates the pace of discovery. For instance, in medical research, data analytics can help identify potential health risks in populations, enabling preventative measures that save lives.

Moreover, the ongoing evolution of data analytics tools means that researchers are continually equipped with cutting-edge technologies that enhance their capabilities. Think of it as upgrading from a basic calculator to a sophisticated computer that can not only crunch numbers but also predict future outcomes based on historical data. This shift is not just about speed; it's about unlocking new avenues of inquiry and fostering innovative approaches to solving complex problems.

As we delve deeper into the transformative impact of data analytics, it’s essential to recognize that this is not merely a trend—it’s a fundamental shift in how research is conducted. The implications are vast, stretching across disciplines and industries. Researchers are now able to make data-driven decisions with confidence, paving the way for new discoveries that can lead to groundbreaking advancements. For example, in environmental studies, data analytics can help track climate change patterns, guiding policy decisions that affect our planet's future.

In conclusion, the advances in data analytics are not just shaping research; they are redefining it. As we continue to explore the depths of what data can reveal, the potential for innovation and discovery is limitless. The future of research is bright, fueled by the insights gained from data analytics, and we are only beginning to scratch the surface of what is possible.

- What is data analytics? Data analytics is the process of examining datasets to draw conclusions about the information they contain, often with the aid of specialized systems and software.

- How does data analytics improve research? It enhances research by allowing for faster data processing, uncovering hidden patterns, and enabling more informed decision-making.

- What are some challenges of implementing data analytics in research? Common challenges include data privacy concerns, ensuring data quality, and the need for specialized skills to analyze data effectively.

- What role does machine learning play in data analytics? Machine learning algorithms help in predicting outcomes and identifying patterns within data, making analyses more efficient and accurate.

The Evolution of Data Analytics in Research

Data analytics has come a long way since its inception, evolving from simple statistical methods to sophisticated algorithms that drive modern research. In the early days, researchers relied heavily on basic statistical techniques to analyze small datasets. This approach, while effective for its time, often lacked the depth and nuance required to uncover complex patterns and insights. Fast forward to today, and we find ourselves in a world where data analytics is not just a tool but a fundamental component of research across various disciplines.

The journey of data analytics can be traced back to the late 20th century when computers began to revolutionize the way data was processed. Initially, researchers used software like SPSS and SAS to perform statistical analyses. These tools allowed for more complex calculations and the ability to handle larger datasets. However, the true explosion in data analytics came with the advent of the internet and the digital age, which led to an unprecedented increase in data generation. Suddenly, researchers had access to vast amounts of information, but they needed better ways to analyze it.

As technology progressed, the introduction of big data and machine learning transformed the landscape of research. Researchers began to realize that traditional statistical methods were insufficient for analyzing the massive volumes of data being generated. This realization led to the development of new methodologies capable of handling and interpreting big data. The rise of machine learning algorithms allowed researchers to go beyond mere correlation and delve into predictive analytics, where they could forecast trends and outcomes based on historical data.

Moreover, the integration of artificial intelligence (AI) into data analytics has further enhanced research capabilities. AI technologies enable researchers to automate data processing, identify patterns, and derive insights at a speed and accuracy that was previously unimaginable. For instance, in fields like healthcare, AI-driven analytics can sift through thousands of patient records to identify risk factors for diseases, ultimately aiding in preventative measures and treatment plans.

Today, data analytics is not just about crunching numbers; it's about telling stories through data. Researchers are now equipped with tools that allow them to visualize complex datasets, making it easier to communicate findings to a broader audience. This shift towards data storytelling has made research more accessible and engaging, encouraging collaboration across disciplines.

As we look to the future, it’s clear that data analytics will continue to evolve. With advancements in technology and methodologies, researchers will be able to uncover insights that were once thought to be unattainable. The evolution of data analytics in research is a testament to the power of innovation and the relentless pursuit of knowledge.

- What is data analytics? Data analytics refers to the process of examining datasets to draw conclusions about the information they contain, often using specialized systems and software.

- How has data analytics changed research? Data analytics has transformed research by enabling more sophisticated analysis of large datasets, leading to deeper insights and more accurate predictions.

- What technologies are essential for data analytics? Key technologies include machine learning, artificial intelligence, and big data frameworks, which facilitate the efficient processing and analysis of vast amounts of information.

- What are the challenges of data analytics in research? Challenges include data privacy concerns, ensuring data quality, and the need for researchers to possess specialized analytical skills.

Key Technologies Driving Data Analytics

In the rapidly evolving landscape of research, data analytics has become a cornerstone for driving innovation and discovery. The key technologies that fuel this transformation are not just buzzwords; they represent the very fabric of modern research methodologies. From machine learning to big data, these technologies are redefining how researchers gather, analyze, and interpret data.

At the forefront of these advancements is machine learning, a branch of artificial intelligence that enables systems to learn from data and improve their performance over time without being explicitly programmed. Imagine teaching a child to recognize animals by showing them countless pictures; similarly, machine learning algorithms can analyze vast datasets to identify patterns and make predictions. This capability is invaluable in research, where outcomes can often be uncertain. For instance, in medical research, machine learning can help predict patient responses to treatments by analyzing historical data, ultimately leading to more personalized healthcare solutions.

Another significant player in the data analytics arena is big data. This term refers to the massive volumes of structured and unstructured data generated every second across various platforms. Traditional data analysis methods often struggle to keep up with the sheer scale of big data. However, with advanced analytics tools, researchers can now sift through these enormous datasets to uncover insights that were previously hidden. For example, in climate research, analyzing big data allows scientists to identify trends and anomalies that could indicate shifts in global weather patterns. This capability not only enhances the accuracy of research findings but also informs policy decisions and strategic planning.

Furthermore, we cannot overlook the role of artificial intelligence (AI). AI technologies are increasingly being integrated into research processes, enhancing the ability to analyze complex datasets quickly and efficiently. By automating routine tasks, AI frees up researchers to focus on more critical aspects of their work, fostering creativity and innovation. For instance, AI-powered analytics can help in the rapid processing of survey data, providing researchers with immediate insights that can shape their studies in real-time.

Natural language processing (NLP) is another exciting technology that is revolutionizing data analytics in research. This branch of AI focuses on the interaction between computers and human language, allowing researchers to analyze vast amounts of textual data. Whether the source is academic papers, social media posts, or survey responses, NLP tools can extract meaningful information, sentiment, and trends from unstructured text. This capability is particularly useful in fields like social sciences, where understanding public sentiment can significantly impact research outcomes.

In summary, the convergence of these technologies—machine learning, big data, AI, and natural language processing—has created a powerful toolkit for researchers. By leveraging these advancements, researchers can enhance their efficiency, accuracy, and ultimately, the impact of their work. The future of research is undoubtedly intertwined with these technologies, paving the way for groundbreaking discoveries and innovations.

Machine Learning Applications

In the realm of research, machine learning has emerged as a game-changer, revolutionizing how we analyze data and draw conclusions. Imagine a world where researchers no longer wade through mountains of data manually; instead, they leverage algorithms that can learn and adapt, making sense of complex datasets in a fraction of the time. This capability allows researchers to focus on what truly matters: interpreting results and making impactful decisions.

One of the most exciting applications of machine learning in research is its ability to predict outcomes. For instance, in fields such as healthcare, machine learning models can analyze patient data to forecast disease progression and treatment responses. By identifying patterns that might be invisible to the human eye, these models enable researchers to tailor interventions to individual patients, enhancing the effectiveness of treatments and improving overall patient care.

Moreover, machine learning isn't just about predictions; it's also about enhancing data analysis. Researchers can utilize clustering algorithms to group similar data points, revealing hidden relationships and trends. For example, in environmental science, researchers might analyze climate data to identify patterns correlating with specific weather events. This can lead to better understanding and forecasting of climate change impacts, ultimately guiding policy decisions and conservation efforts.
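The grouping idea behind clustering can be sketched in a few lines of Python. What follows is a minimal k-means loop using only the standard library; the function name `kmeans`, its parameters, and the sample `readings` are assumptions made up for illustration, and a real study would more likely rely on an established library implementation.

```python
import random

def kmeans(points, k, iterations=20, seed=0):
    """Group 2-D points into k clusters with a basic k-means loop."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k of the input points
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            distances = [(p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2 for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its cluster.
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster is empty
                centroids[i] = (
                    sum(m[0] for m in members) / len(members),
                    sum(m[1] for m in members) / len(members),
                )
    return centroids, clusters

# Hypothetical measurements, invented for illustration only.
readings = [(1.0, 1.2), (0.8, 0.9), (1.3, 1.1),
            (9.8, 10.1), (10.2, 9.7), (10.0, 10.4)]
centroids, clusters = kmeans(readings, k=2)
```

With two well-separated groups like these, the loop settles on one cluster per group. The number of clusters `k` is a choice the researcher makes, and results on messier data can depend on the starting centroids.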

Furthermore, machine learning can streamline the research process by automating repetitive tasks. Tasks such as data cleaning, preprocessing, and even preliminary analysis can be handled by machine learning algorithms, freeing up researchers to focus on more creative and strategic aspects of their projects. This is particularly valuable in fields like social sciences, where qualitative data can be voluminous and complex. By employing natural language processing (NLP) techniques, researchers can sift through vast amounts of textual data, extracting meaningful insights that would otherwise take weeks or months to analyze manually.

To illustrate the power of machine learning in research, consider the following table that summarizes key applications across different fields:

| Field | Application | Impact |
|---|---|---|
| Healthcare | Predictive modeling for disease outcomes | Improved patient treatment plans |
| Environmental Science | Climate pattern analysis | Better forecasting of climate events |
| Social Sciences | Textual data analysis using NLP | Enhanced understanding of social trends |
| Finance | Fraud detection algorithms | Increased security and trust in transactions |

As we can see, the applications of machine learning in research are vast and varied. Each application not only enhances the efficiency of research processes but also contributes significantly to the accuracy of findings. The ability to analyze large datasets and identify patterns is akin to having a superpower in the research world—one that can lead to groundbreaking discoveries and innovations.

Looking ahead, it's clear that the integration of machine learning into research methodologies will only deepen. As algorithms become more sophisticated and data continues to grow exponentially, researchers will be equipped with tools that can provide insights previously thought impossible. This not only paves the way for new discoveries but also challenges researchers to think critically about the implications of their findings in a world increasingly driven by data.

Q: How does machine learning improve research outcomes?

A: Machine learning enhances research outcomes by enabling faster data analysis, predicting trends, and uncovering hidden patterns that inform decision-making.

Q: What are some common machine learning algorithms used in research?

A: Common algorithms include regression analysis, decision trees, clustering algorithms, and neural networks, each serving different purposes depending on the research goals.

Q: Is machine learning accessible to all researchers?

A: While some knowledge of programming and statistics is beneficial, many tools and platforms are becoming increasingly user-friendly, making machine learning more accessible to researchers across various disciplines.

Predictive Analytics in Research

Predictive analytics is like having a crystal ball for researchers, allowing them to foresee trends and outcomes based on historical data. Imagine being able to predict the next big breakthrough in medicine or the next popular trend in consumer behavior! This powerful tool leverages statistical algorithms and machine learning techniques to analyze current and historical data, enabling researchers to make informed decisions and plan strategies. It’s not just about crunching numbers; it’s about turning data into actionable insights that can change the course of research.
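As a rough illustration of forecasting from historical data, the sketch below fits a straight line by ordinary least squares and extends it one step forward. The function names `fit_line` and `predict` and the yearly case counts are assumptions invented for this example; real predictive models are usually richer and should report uncertainty alongside any forecast.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(x, slope, intercept):
    """Evaluate the fitted line at x."""
    return slope * x + intercept

# Hypothetical yearly case counts, invented for illustration only.
years = [2019, 2020, 2021, 2022, 2023]
cases = [110, 120, 130, 140, 150]
slope, intercept = fit_line(years, cases)
forecast_2024 = predict(2024, slope, intercept)  # extrapolates the trend one year
```

Because the invented counts rise by exactly 10 per year, the fitted slope is 10 and the forecast for 2024 is 160. Real data is noisier, so a straight-line extrapolation like this is best treated as a starting point rather than a conclusion.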

One of the standout features of predictive analytics is its ability to identify patterns that may not be immediately visible to the naked eye. For instance, in the field of healthcare, predictive models can analyze patient data to forecast disease outbreaks or treatment outcomes. By examining various factors—such as demographics, previous health records, and even environmental influences—researchers can anticipate future health crises and allocate resources more effectively.

Moreover, predictive analytics can significantly enhance the accuracy of research conclusions. By utilizing complex algorithms, researchers can test various scenarios and outcomes, leading to a deeper understanding of their data. This not only boosts confidence in their findings but also helps in crafting more robust hypotheses for further investigation. In a world where data is abundant, the ability to sift through it and draw meaningful conclusions is invaluable.

To illustrate the impact of predictive analytics, consider climate science. Researchers use predictive models built on historical weather patterns to forecast future climate conditions. Such forecasts can inform policy decisions and help communities prepare for potential natural disasters. The implications of these predictive capabilities are profound, affecting everything from urban planning to disaster response.

In summary, predictive analytics is revolutionizing research methodologies across various fields. By enabling researchers to anticipate outcomes and make data-driven decisions, it enhances both the efficiency and effectiveness of research efforts. As we continue to refine these techniques and incorporate more sophisticated technologies, the future of research looks incredibly promising.

- What is predictive analytics? Predictive analytics involves using historical data and statistical algorithms to forecast future outcomes, helping researchers make informed decisions.

- How is predictive analytics used in research? It is used to identify patterns, forecast trends, and enhance the accuracy of research conclusions across various fields, including healthcare, climate science, and marketing.

- What are some benefits of using predictive analytics? Benefits include improved decision-making, increased efficiency, and the ability to anticipate trends and outcomes that can lead to significant breakthroughs.

- What challenges are associated with predictive analytics? Challenges include data quality issues, the need for specialized skills, and ethical considerations regarding data privacy.

Natural Language Processing

Natural Language Processing, or NLP, is revolutionizing the way researchers interact with textual data. Imagine trying to sift through mountains of documents, articles, and reports to find that one crucial piece of information. It sounds daunting, right? Well, that’s where NLP steps in like a superhero, making the process not just manageable, but incredibly efficient. By leveraging advanced algorithms and machine learning techniques, NLP allows researchers to analyze and interpret vast amounts of unstructured data, transforming it into structured insights that can drive meaningful conclusions.

One of the most remarkable aspects of NLP is its ability to understand context and sentiment. For instance, when researchers are analyzing social media data or customer feedback, they can utilize NLP to gauge public opinion or sentiment towards a product or service. This capability enables researchers to not only extract factual information but also understand the underlying emotions and attitudes present in the text. Think of it as having a conversation with the data, where the nuances of language are understood, leading to richer insights.

Moreover, NLP encompasses various techniques that enhance data extraction and interpretation. Some of these techniques include:

- Tokenization: Breaking down text into individual words or phrases, making it easier to analyze.

- Named Entity Recognition (NER): Identifying and classifying key entities in the text, such as names, organizations, and locations.

- Sentiment Analysis: Determining the emotional tone behind a series of words, helping researchers understand public sentiment.
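Two of the techniques above, tokenization and sentiment analysis, can be shown with a toy example. The sketch below uses a regular expression to split text into tokens and a tiny word list to score sentiment; the `POSITIVE` and `NEGATIVE` sets and both function names are hypothetical, and real projects typically use validated lexicons or trained models. Named entity recognition is left out because it generally needs a trained model.

```python
import re

# Hypothetical mini-lexicon for illustration; not a validated resource.
POSITIVE = {"good", "great", "helpful", "love"}
NEGATIVE = {"bad", "poor", "slow", "hate"}

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def sentiment_score(text):
    """Return (positive count - negative count) / token count, or 0.0 if empty."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    positive = sum(token in POSITIVE for token in tokens)
    negative = sum(token in NEGATIVE for token in tokens)
    return (positive - negative) / len(tokens)

print(tokenize("The clinic was great!"))        # ['the', 'clinic', 'was', 'great']
print(sentiment_score("The clinic was great!")) # 0.25
```

A word-count approach like this ignores negation and sarcasm ("not great" still scores as positive), which is one reason model-based methods are preferred for serious analysis.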

These techniques not only save time but also increase the accuracy of research findings. For example, in healthcare research, NLP can be used to analyze patient records, extracting critical information that can lead to improved patient outcomes. By automating the tedious process of data extraction, researchers can focus on what truly matters—drawing conclusions and making impactful decisions based on their findings.

As we look to the future, the potential of NLP in research is immense. The continuous advancements in machine learning and artificial intelligence are paving the way for even more sophisticated NLP applications. Researchers can expect to see improvements in language models that can understand and generate human-like text, making the interaction between humans and machines more seamless than ever before.

In conclusion, NLP is not just a tool; it’s a game-changer in the realm of research. By enabling researchers to harness the power of language, it opens up new avenues for exploration and discovery. As we continue to navigate the complexities of data, NLP will undoubtedly play a pivotal role in shaping the future of research methodologies and outcomes.

- What is Natural Language Processing? NLP is a field of artificial intelligence that focuses on the interaction between computers and humans through natural language, enabling machines to understand, interpret, and generate human language.

- How is NLP used in research? NLP is used in research to analyze large volumes of text data, extract meaningful insights, and understand sentiment, which can aid in decision-making and enhancing research outcomes.

- What are the benefits of using NLP in research? The benefits include increased efficiency in data analysis, improved accuracy in extracting insights, and the ability to understand complex language patterns and sentiments.

Big Data's Role in Research

In today's fast-paced world, big data has emerged as a game-changer in the research landscape, revolutionizing how we gather, analyze, and interpret information. Gone are the days when researchers relied solely on small sample sizes and limited datasets. With the advent of big data, researchers can now harness vast amounts of information from various sources, leading to insights that were previously unimaginable. Imagine trying to solve a complex puzzle with just a few pieces; that’s what traditional research methods often felt like. Now, with big data, it's like having the entire puzzle laid out before you, allowing for a clearer and more comprehensive picture.

Big data encompasses a multitude of data types, including structured, unstructured, and semi-structured data. This variety enables researchers to explore diverse fields such as healthcare, social sciences, and environmental studies with unprecedented depth. For instance, in healthcare research, data from electronic health records, wearable devices, and social media can be analyzed to identify trends in patient behavior, treatment efficacy, and even disease outbreaks. The ability to analyze such a rich tapestry of information not only enhances the accuracy of findings but also opens up new avenues for discovery.

Moreover, the integration of big data analytics tools has streamlined the research process significantly. Researchers can now utilize advanced algorithms and software to sift through massive datasets quickly. This not only saves time but also allows for real-time data analysis, which is crucial in fields where conditions can change rapidly, such as epidemiology. The speed at which researchers can now obtain insights means that decisions can be made proactively, rather than reactively, leading to more effective interventions and solutions.

However, the implementation of big data in research is not without its challenges. Researchers must grapple with issues related to data management, storage, and processing capabilities. As datasets grow, so do the demands on technology and infrastructure. It’s akin to trying to fill an ever-expanding swimming pool; without the right equipment, it can quickly become overwhelming. To address these challenges, many institutions are investing in robust data management systems and cloud-based solutions that can handle large volumes of data efficiently.

Furthermore, the ethical implications of using big data in research cannot be overlooked. As researchers delve into vast datasets, they must remain vigilant about data privacy and the ethical use of information. Establishing clear protocols for data collection, usage, and sharing is essential to maintain the trust of participants and the integrity of the research. It’s like walking a tightrope; one misstep could lead to significant repercussions, not just for the research team but for the entire field.

In summary, big data is reshaping the research landscape by providing researchers with the tools to analyze and interpret information on an unprecedented scale. While challenges remain, the potential benefits far outweigh the drawbacks. As technology continues to advance and methodologies evolve, we can expect big data to play an even more significant role in future research endeavors, paving the way for groundbreaking discoveries and innovations.

- What is big data? Big data refers to extremely large datasets that can be analyzed computationally to reveal patterns, trends, and associations, especially relating to human behavior and interactions.

- How does big data impact research? Big data allows researchers to analyze vast amounts of information quickly, leading to more accurate findings and the ability to identify trends that were previously undetectable.

- What are the challenges of using big data in research? Challenges include data management, privacy concerns, and the need for specialized skills to analyze large datasets effectively.

- What ethical considerations are involved in big data research? Researchers must ensure data privacy, obtain consent, and adhere to legal regulations regarding data use and sharing.

Challenges in Data Analytics for Research

In the ever-evolving landscape of data analytics, researchers are navigating a myriad of challenges that can complicate their efforts. While the benefits of data analytics in enhancing research methodologies are profound, there are significant hurdles that need to be addressed. One of the most pressing challenges is data privacy. As researchers collect and analyze vast amounts of data, often containing sensitive information, they must ensure compliance with regulations such as GDPR and HIPAA. Failing to protect personal data not only jeopardizes the integrity of the research but can also lead to severe legal repercussions.

Another critical issue is the quality and integrity of data. Inaccurate or incomplete data can skew research findings, leading to erroneous conclusions. Researchers must implement rigorous data collection and cleaning processes to ensure that their datasets are reliable. This involves not only verifying the accuracy of the data but also understanding the context in which it was collected. For instance, if data is sourced from social media, researchers need to account for biases inherent in those platforms, which can distort the analysis.
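A first pass at the data checks described here might look like the following sketch, which counts missing values, out-of-range values, and exact duplicate records. The function name `quality_report`, the field names, and the age range are assumptions chosen for illustration, not a standard.

```python
def quality_report(records, required_fields, valid_ranges):
    """Count missing values, out-of-range values, and duplicate records."""
    report = {"missing": 0, "out_of_range": 0, "duplicates": 0}
    seen = set()
    for record in records:
        # Two records count as duplicates only if every field matches.
        key = tuple(sorted(record.items()))
        if key in seen:
            report["duplicates"] += 1
        seen.add(key)
        for field in required_fields:
            if record.get(field) is None:
                report["missing"] += 1
        for field, (low, high) in valid_ranges.items():
            value = record.get(field)
            if value is not None and not (low <= value <= high):
                report["out_of_range"] += 1
    return report

# Hypothetical survey records, invented for illustration only.
records = [
    {"id": 1, "age": 34},
    {"id": 2, "age": None},   # missing age
    {"id": 3, "age": 212},    # implausible age
    {"id": 1, "age": 34},     # exact duplicate of the first record
]
report = quality_report(records, required_fields=["age"], valid_ranges={"age": (0, 120)})
```

Checks like these flag problems but cannot judge context, such as platform-specific bias in social media data, so they complement rather than replace a researcher's review of how the data was collected.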

Moreover, the rapid pace of technological advancement poses a challenge in terms of skill gaps. As new tools and methodologies emerge, researchers are often required to upskill or reskill to keep pace. This can be particularly daunting for those in academia who may not have access to the same resources as their counterparts in the private sector. Institutions must invest in training programs and workshops to equip researchers with the necessary skills to leverage data analytics effectively.

To further illustrate these challenges, consider the following table that outlines some of the most common obstacles faced by researchers in the realm of data analytics:

| Challenge | Description |
|---|---|
| Data Privacy | Ensuring compliance with legal regulations while handling sensitive information. |
| Data Quality | Maintaining accuracy and completeness to avoid skewed results. |
| Skill Gaps | Keeping up with rapidly evolving technologies and methodologies. |

In addition to these hurdles, researchers also face the challenge of interpretation and analysis. With the influx of data, the ability to discern meaningful patterns and insights becomes increasingly complex. Researchers must not only be adept at using analytical tools but also possess the critical thinking skills to interpret the results accurately. This can lead to a situation where the sheer volume of data overwhelms the researcher, resulting in analysis paralysis.

To navigate these challenges, collaboration is key. By working alongside data scientists and IT professionals, researchers can bridge the gap between raw data and actionable insights. This multidisciplinary approach not only enhances the quality of research but also fosters a culture of innovation. In essence, while challenges in data analytics for research are significant, they are not insurmountable. With the right strategies and support, researchers can harness the power of data analytics to drive their inquiries forward.

- What are the main challenges in data analytics for research? The main challenges include data privacy concerns, ensuring data quality and integrity, and addressing skill gaps among researchers.

- How can researchers ensure data privacy? Researchers can ensure data privacy by complying with legal regulations, implementing data encryption, and anonymizing sensitive information.

- Why is data quality important in research? Data quality is crucial because inaccurate or incomplete data can lead to erroneous conclusions, undermining the research's credibility.

- How can researchers overcome skill gaps in data analytics? Researchers can overcome skill gaps by participating in training programs, workshops, and collaborating with data professionals.

Data Privacy and Ethics

In the age of big data, where information flows like a raging river, the importance of data privacy and ethics has never been more pronounced. As researchers harness the power of data analytics, they often find themselves at a crossroads, balancing the pursuit of knowledge with the ethical implications of their methods. With every click and every byte of data collected, questions arise: Who owns this data? How is it being used? Are individuals' rights being respected? These questions aren't just academic; they are the bedrock of responsible research practices.

At the core of data privacy is the principle that individuals should have control over their personal information. This control is vital, especially when data is being used to draw conclusions about people's lives. For instance, consider a researcher analyzing health data to identify trends in disease outbreaks. While the insights gained can lead to significant public health advancements, the researcher must tread carefully to ensure that sensitive information is anonymized and that individuals' identities remain protected. This is where ethical guidelines come into play, ensuring that data is collected and used responsibly.
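One common first step toward protecting identities is to replace direct identifiers with keyed hashes and drop fields such as names. The sketch below does this with Python's standard `hmac` and `hashlib` modules; the field names, the `secret_key`, and both function names are assumptions for illustration. Note that this is pseudonymization rather than full anonymization: the remaining fields can still allow re-identification, so it is only one layer of protection.

```python
import hashlib
import hmac

def pseudonymize(identifier, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def strip_identifiers(record, secret_key, id_field="patient_id", drop_fields=("name",)):
    """Return a copy with the ID pseudonymized and direct identifiers removed."""
    cleaned = {k: v for k, v in record.items() if k not in drop_fields}
    cleaned[id_field] = pseudonymize(str(record[id_field]), secret_key)
    return cleaned

# Hypothetical record and key, invented for illustration only.
secret_key = b"replace-with-a-securely-stored-key"
record = {"patient_id": 17, "name": "A. Example", "diagnosis": "flu"}
safe_record = strip_identifiers(record, secret_key)
```

Using a keyed hash means the same patient maps to the same pseudonym across datasets, which keeps records linkable for analysis, while anyone without the key cannot simply hash guessed IDs to reverse the mapping.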

Moreover, the legal landscape surrounding data privacy is continually evolving. Regulations such as the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA), a state law in the United States, have set stringent standards for how data should be handled. These laws not only protect individuals but also impose hefty penalties on organizations that fail to comply. As a result, researchers must stay informed about these regulations and integrate them into their methodologies.

Another critical aspect of data ethics involves the concept of informed consent. Before collecting data, researchers should ensure that participants are fully aware of how their information will be used. This transparency fosters trust and encourages participation, which is essential for the success of any research project. It’s like asking for permission before borrowing a friend’s favorite book; it shows respect for their ownership and privacy.

Furthermore, the issue of data bias cannot be overlooked. When datasets are skewed or unrepresentative, the conclusions drawn can perpetuate existing inequalities. For instance, if a study on healthcare outcomes predominantly includes data from one demographic group, the findings may not be applicable to others. Thus, researchers have a responsibility to ensure that their data is comprehensive and inclusive, actively seeking out diverse perspectives to enrich their findings.
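
One simple way to spot the kind of skew described above is to compare each group's share of the sample with its share of the population the study is meant to describe. The sketch below assumes a hypothetical helper, `representation_gaps`, and made-up group labels and reference shares; real reference figures would come from a census or similar source.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Return (sample share - reference share) for each reference group."""
    counts = Counter(sample_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sample is empty")
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Illustrative numbers only: group A dominates the sample.
sample = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference = {"A": 0.5, "B": 0.3, "C": 0.2}

for group, gap in representation_gaps(sample, reference).items():
    print(f"{group}: {gap:+.2f}")
```

A large positive gap means a group is over-represented and a large negative gap means it is under-represented. How large a gap is acceptable is a judgment call that depends on the study.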

To summarize, the intersection of data privacy and ethics is a complex yet crucial area for researchers. As they navigate the waters of data analytics, they must prioritize the rights and dignity of individuals while striving for innovation and advancement. By adhering to ethical practices and legal standards, researchers can contribute to a future where data is used responsibly and beneficially.

- What is data privacy? Data privacy refers to the proper handling and protection of sensitive personal information, ensuring individuals have control over their data.

- Why is ethics important in data research? Ethics are essential in data research to maintain trust, ensure compliance with laws, and protect individuals' rights.

- What are some common data privacy regulations? Common regulations include GDPR in the European Union and CCPA in California, which set standards for data handling and privacy.

- How can researchers ensure informed consent? Researchers can ensure informed consent by clearly explaining how data will be used and obtaining explicit permission from participants.

Data Quality and Integrity

When it comes to research, the quality and integrity of data are paramount. Imagine setting out to build a house with faulty blueprints; the end result is bound to be unstable, right? Similarly, if researchers base their findings on poor-quality data, the conclusions drawn can lead to erroneous results and misguided decisions. This is why ensuring data quality and integrity is not just a step in the research process; it's the very foundation upon which credible research is built.

Data quality refers to the accuracy, completeness, consistency, and reliability of data. Researchers must engage in meticulous practices to collect and maintain high-quality data. This involves several steps:

- Data Collection: Ensuring that data is collected from reliable sources and using appropriate methodologies.

- Data Cleaning: Identifying and rectifying errors or inconsistencies in the dataset.

- Data Management: Implementing robust systems for storing and accessing data to prevent loss or corruption.
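
The data cleaning step above can be sketched in a few lines of Python. This is only one possible approach, using the standard library: it normalizes text values, drops rows that are missing a required field, and removes exact duplicates. The function name `clean_records` and the field names `site` and `temp_c` are hypothetical, and the sketch assumes every value is hashable (strings, numbers, or `None`).

```python
def clean_records(records, required_fields):
    """Drop incomplete rows and exact duplicates after normalizing text."""
    seen = set()
    cleaned = []
    for row in records:
        # Normalize strings so " North " and "north" compare equal.
        normalized = {
            key: value.strip().lower() if isinstance(value, str) else value
            for key, value in row.items()
        }
        # Skip rows where a required field is missing or empty.
        if any(normalized.get(field) in (None, "") for field in required_fields):
            continue
        # A sorted tuple of items acts as a hashable fingerprint of the row.
        fingerprint = tuple(sorted(normalized.items()))
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        cleaned.append(normalized)
    return cleaned


# Illustrative rows, not real measurements.
raw = [
    {"site": " North ", "temp_c": 21.5},
    {"site": "north", "temp_c": 21.5},   # duplicate after normalization
    {"site": "South", "temp_c": None},   # missing measurement
    {"site": "", "temp_c": 19.0},        # missing site
]
print(clean_records(raw, ["site", "temp_c"]))
```

Whether to drop incomplete rows or fill them in is itself a research decision; dropping is simply the easiest option to show here.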

Additionally, data integrity—the assurance that data is authentic and unaltered—is crucial. It involves maintaining the accuracy and consistency of data over its lifecycle. Researchers often employ various strategies to uphold data integrity, including:

- Access Controls: Limiting who can modify or access data to prevent unauthorized changes.

- Audit Trails: Keeping detailed logs of data access and modifications to track changes.

- Regular Backups: Ensuring that data is regularly backed up to prevent loss in case of system failures.
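
As a rough illustration of how audit trails help detect unwanted changes, the Python sketch below records a SHA-256 checksum of a data file in an append-only log and later checks whether the file still matches it. The function names, the log format (one JSON object per line), and the file names are all assumptions chosen for the example, not a standard.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone


def sha256_of_file(path, chunk_size=65536):
    """Compute the SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def append_audit_entry(log_path, user, action, data_path):
    """Append one JSON line describing who did what, plus the file checksum."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "file": str(data_path),
        "sha256": sha256_of_file(data_path),
    }
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(entry) + "\n")
    return entry


def is_unchanged(data_path, expected_sha256):
    """Return True if the file still matches the recorded checksum."""
    return sha256_of_file(data_path) == expected_sha256


# Small demonstration using a temporary directory and made-up data.
with tempfile.TemporaryDirectory() as tmp:
    data_path = os.path.join(tmp, "survey.csv")
    log_path = os.path.join(tmp, "audit.log")
    with open(data_path, "w", encoding="utf-8") as f:
        f.write("id,score\n1,42\n")
    entry = append_audit_entry(log_path, "alice", "created", data_path)
    unchanged_before = is_unchanged(data_path, entry["sha256"])
    with open(data_path, "a", encoding="utf-8") as f:
        f.write("2,17\n")  # simulate an unlogged modification
    unchanged_after = is_unchanged(data_path, entry["sha256"])

print(unchanged_before, unchanged_after)  # prints: True False
```

A checksum only reveals that something changed, not what changed or why, which is why it is usually paired with access controls and backups as listed above.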

In the age of big data, where vast amounts of information are generated every second, the challenge of maintaining data quality and integrity is even more pronounced. Researchers must navigate through noise and irrelevant data to extract meaningful insights. This is where advanced data analytics techniques come into play, helping to filter out the noise and highlight the valuable nuggets of information.

Moreover, the consequences of neglecting data quality and integrity can be severe. Consider the implications in fields like healthcare, where inaccurate data can lead to misdiagnoses or ineffective treatments. Or in environmental studies, where flawed data could result in misguided policies that fail to address critical issues. Therefore, researchers must adopt best practices and leverage technology to ensure their data is not just abundant but also trustworthy.

In conclusion, data quality and integrity are not mere checkboxes in the research process; they are vital components that influence the overall credibility and impact of research findings. By prioritizing these aspects, researchers can build a solid foundation for their work, leading to more reliable outcomes and greater trust from the communities they serve.

- What are the main components of data quality? Data quality encompasses accuracy, completeness, consistency, and reliability.

- Why is data integrity important? Data integrity ensures that the data remains authentic and unaltered, which is crucial for credible research.

- How can researchers maintain data integrity? Researchers can maintain data integrity through access controls, audit trails, and regular backups.

- What challenges do researchers face regarding data quality? Common challenges include data collection errors, inconsistencies, and the sheer volume of data that can introduce noise.

Future Trends in Data Analytics for Research

As we look ahead, the landscape of data analytics in research is poised for remarkable transformation. The rapid pace of technological advancement is not just a trend; it's a revolution that is fundamentally changing how researchers approach their work. Imagine a world where outcomes are not just explained after the fact but forecast from data with far greater accuracy. This is the future we’re heading towards, and it’s both exciting and daunting.

One of the most significant trends shaping the future of data analytics is the integration of artificial intelligence (AI) with traditional research methodologies. AI is no longer just a buzzword; it's becoming an essential component of research frameworks. With AI, researchers can automate data analysis, allowing them to focus on interpreting results rather than getting bogged down in the minutiae of data processing. This shift not only enhances efficiency but also opens the door to deeper insights that might have been overlooked in manual analyses.

Moreover, the rise of cloud computing is set to revolutionize how data is stored and processed. Researchers can now access vast amounts of data and powerful analytical tools from anywhere in the world. This democratization of data means that even small research teams can leverage big data analytics without the need for extensive infrastructure. Imagine a small lab in a remote location collaborating with global partners in real-time, sharing insights and data seamlessly. This collaborative potential is one of the most exciting aspects of future research.

Additionally, real-time data analytics is becoming increasingly important. In fields like healthcare, where timely decisions can save lives, the ability to analyze data as it comes in is invaluable. Researchers can monitor ongoing studies and adjust their methodologies on the fly, making their research more adaptive and responsive. This real-time capability is not just a luxury; it is becoming a necessity as the speed of change in various fields accelerates.
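
To give a feel for what "analyzing data as it comes in" can mean in practice, here is a small Python sketch that keeps a running mean and standard deviation using Welford's online algorithm, so each new reading is processed once and never stored. The `RunningStats` class, the three-standard-deviation threshold, and the sample readings are illustrative assumptions, not a recommendation for any clinical setting.

```python
import math


class RunningStats:
    """Running mean and sample standard deviation (Welford's algorithm)."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value):
        """Fold one new observation into the running statistics."""
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    @property
    def std(self):
        """Sample standard deviation; 0.0 until two values have been seen."""
        if self.count < 2:
            return 0.0
        return math.sqrt(self._m2 / (self.count - 1))

    def is_outlier(self, value, threshold=3.0):
        """Flag values more than `threshold` standard deviations from the mean."""
        if self.count < 2 or self.std == 0:
            return False
        return abs(value - self.mean) > threshold * self.std


# Made-up heart-rate readings arriving one at a time.
stats = RunningStats()
for reading in [72, 75, 71, 74, 73, 120]:
    if stats.is_outlier(reading):
        print(f"unusual reading: {reading}")
    stats.update(reading)
```

Each reading is checked against the statistics gathered so far and then folded in, so the summary stays current without re-scanning earlier data.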

Another trend to watch is the increasing importance of data visualization. As datasets grow larger and more complex, the ability to present findings in a clear and visually appealing manner becomes crucial. Researchers are now using advanced visualization tools to create interactive dashboards that allow stakeholders to explore data intuitively. This not only enhances understanding but also facilitates better decision-making. Think of it as turning a complex novel into an engaging graphic novel: suddenly, the story becomes much easier to grasp!

In the realm of ethics, we are also seeing a shift. With the growing concern over data privacy, researchers are prioritizing ethical data practices. This includes not only compliance with regulations like GDPR but also a commitment to transparency and accountability in how data is collected and used. The future of research will likely see a stronger emphasis on ethical considerations, ensuring that data analytics benefits society without compromising individual rights.

As we embrace these trends, it's essential to keep an eye on the skills required for future researchers. The demand for professionals who can navigate the intersection of data science and specific research fields is on the rise. Educational institutions are starting to adapt their curricula to prepare the next generation of researchers, blending traditional research training with data analytics skills. This will equip them to tackle complex questions with the aid of sophisticated analytical tools.

In summary, the future of data analytics in research is bright and full of possibilities. From AI-driven insights to real-time data processing, the tools and methodologies available to researchers are evolving at an unprecedented pace. As we continue to explore these advancements, one thing is clear: the ability to harness data analytics effectively will define the success of future research endeavors.

- What are the key technologies shaping the future of data analytics in research?

Key technologies include artificial intelligence, machine learning, big data, and cloud computing. These tools are enabling researchers to analyze large datasets more efficiently and derive meaningful insights.

- How does real-time data analytics benefit researchers?

Real-time data analytics allows researchers to make timely decisions and adapt their methodologies based on ongoing findings, which is particularly critical in fields like healthcare.

- What ethical considerations should researchers keep in mind when using data analytics?

Researchers must prioritize data privacy, comply with legal regulations, and maintain transparency in data collection and usage to ensure ethical practices.

Frequently Asked Questions

- What is data analytics and how is it used in research?

Data analytics refers to the systematic computational analysis of data. In research, it is used to interpret complex datasets, derive insights, and make informed decisions. By leveraging data analytics, researchers can identify patterns, predict outcomes, and enhance the overall quality of their findings.

- How has data analytics evolved over time?

Data analytics has evolved significantly from simple statistical methods to advanced techniques involving machine learning and artificial intelligence. Initially, researchers relied on basic data analysis tools, but as technology progressed, so did the capabilities of data analytics, making it an essential component of modern research methodologies.

- What are some key technologies driving data analytics?

Key technologies include machine learning, artificial intelligence, and big data frameworks. These technologies enable researchers to analyze vast amounts of data more efficiently and accurately, leading to deeper insights and more robust conclusions.

- What are machine learning applications in research?

Machine learning algorithms are used in research to predict outcomes, identify hidden patterns, and enhance data analysis processes. This application allows researchers to draw more informed conclusions and make groundbreaking discoveries based on their data.

- How does predictive analytics benefit researchers?

Predictive analytics helps researchers anticipate trends and outcomes by analyzing historical data. This proactive approach enables them to make better decisions and adapt their research strategies based on data-driven insights.

- What role does big data play in research?

Big data plays a crucial role in research by providing access to large datasets that can reveal insights previously unattainable through traditional methods. Researchers can uncover trends and correlations that enhance their understanding of complex phenomena.

- What challenges do researchers face with data analytics?

Common challenges include data privacy concerns, ensuring data quality, and the need for specialized skills. Researchers must navigate these issues to effectively implement data analytics in their work.

- Why is data privacy important in research?

Data privacy is vital to protect sensitive information and maintain the trust of participants. Researchers must adhere to legal regulations and ethical standards to ensure that data is handled responsibly and securely.

- How can researchers ensure data quality?

To ensure data quality, researchers should follow best practices for data collection, cleaning, and management. This includes validating data sources, removing duplicates, and regularly updating datasets to maintain integrity and reliability.

- What are the future trends in data analytics for research?

Future trends include the integration of artificial intelligence into research workflows, cloud-based storage and processing, real-time analytics, more advanced data visualization tools, and a stronger emphasis on ethical data practices. Together, these developments will continue to shape how research is conducted and interpreted.